I knew the end of Agile was coming when we started using miniature sand clocks. Every morning at precisely nine o’clock, the team of developers and architects would stand around a room paneled in whiteboards and begin passing the sand clock from hand to hand.

When it was your turn to hold it, you were supposed to launch into the litany:

Forgive me, Father, for I have sinned. I only wrote two modules yesterday, for it was a day of meetings and fasting, and I had a dependency upon Joe, who’s out sick this week with pneumonia.

The scrum master, the one sitting down while we were standing, would duly note this in Jira, then would intone, “You are three modules behind. Do you anticipate that you will get these done today?”

“I will do the three modules as you request, scrum master, for I have brought down the team average and am now unworthy.”

“See that you do, my child, for the sprint ends next Monday, and management is watching.”

The sand clock would then get passed on to the next developer, and like nervous monks, the rest of us would breathe a sigh of relief when we could hand off the damned glass sand clock to the next poor schmuck in line.

This was no longer a methodology. It had become a religion, and like most religions it really didn’t make that much sense to the outsider — or even to the participants, when it got right down to it.

One day the clock fell on the floor and broke, maybe by accident…

Small is Good

The Agile Manifesto, like most such screeds, began with a great concept. The underlying premise was straightforward: large groups of people were not necessary to complete software projects. Extra people, beyond a certain number, only added communication overhead and slowed a project down.

Many open source projects that did really cool things were built by small development teams of two to twelve people, with the ideal size being about seven.
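One common back-of-the-envelope way to see why extra people add communication overhead (a standard counting model, not something the Manifesto itself spells out) is to count the possible one-to-one communication paths in a team of n people:

$$
C(n) = \frac{n(n-1)}{2}, \qquad C(2) = 1,\quad C(7) = 21,\quad C(12) = 66
$$

Under this model, growing a team from seven people to twelve roughly triples the number of paths, while the headcount grows by less than double.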

When your team was that small, design could practically be done as a group activity.

Two weeks seemed to be an acceptable amount of time to demonstrate substantial development.

Meetings were kept brief, and having the customer present kept them informed.

The alternative, the “Waterfall methodology,” meant that a client would often have to wait six months to see the product, and the unveiling at the end of that phase usually ended with the customer hyperventilating in a corner somewhere.

Agile was hip, it was cool, and it involved Fibonacci Numbers. What was not to like about that?

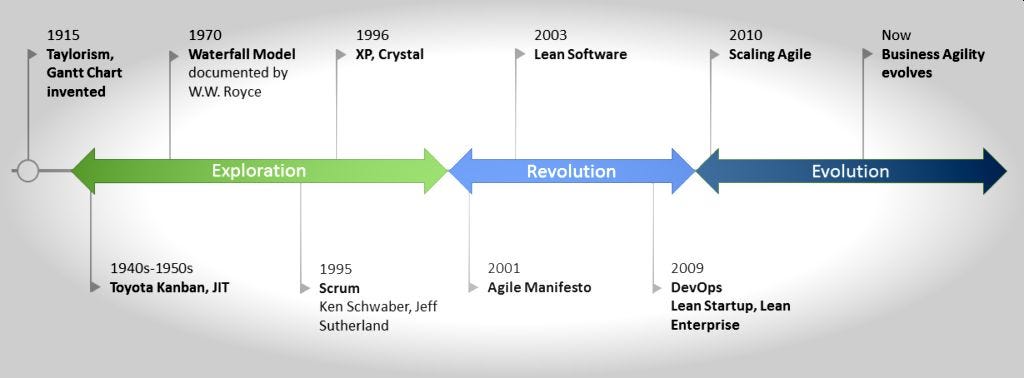

The History of AGILE

Over the years, I began to realize a subtle but very important distinction. The Agile Manifesto got it wrong from the start: small teams didn’t work better because they could use a lean and mean methodology to complete a project. Rather, small teams on short projects were what gave a lean and mean methodology any chance of success.

Becoming Agile

At the time Agile was introduced, the typical software project fit neatly into what Agile excels at. Most were web-based, with a web interface that could be up and running in a matter of days. They used databases to store state, and the web developer typically had unrestricted access to that database. They were projects that took four to six months to complete (eight to twelve two-week sprints), and they were primarily client facing, both in the sense that user interfaces were an important part of the experience and in the sense that the client could see changes happening practically before her eyes.

This was about the time that the concept of a minimum viable product (MVP) began to take hold: the notion that, beyond the first couple of sprints, the product would be useful even if development stopped right then, for some arbitrary definition of useful.

Needless to say, businesses began to take note, and “being Agile” soon became the mantra of the week.

Agile evolved from a rough manifesto into a formal methodology in which a project manager (now known as a scrum master) collaborated with managers to create “stories” that described what they wanted their product to accomplish (what were once known as requirements), along with “tasks” that broke those stories down into the necessary steps. Together, these formed the contract between the manager (via the scrum master) and the developer or designer.

Within this framework, a kind of dance was supposed to unfold: first the overall structure of the application, then successively finer levels of detail, and finally the implementation.

In theory, by tracking this information, one may determine whether a project is behind schedule and, if so, allocate additional resources to shore up the issue area.
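The tracking idea behind a burndown chart can be sketched in a few lines of Python. This is a minimal illustration, not how Jira or any particular tool computes it: it assumes a straight-line “ideal” burndown from the total story points to zero over the sprint, and flags any day on which more work remains than that line allows. The function names, the 40-point total, and the daily figures are all hypothetical.

```python
def ideal_remaining(total_points: int, sprint_days: int, day: int) -> float:
    """Straight-line ideal: points that should remain after `day` days."""
    return total_points * (sprint_days - day) / sprint_days


def burndown_report(total_points: int, sprint_days: int,
                    remaining_by_day: list[int]) -> list[tuple[int, int, float, bool]]:
    """Compare actual remaining points with the ideal line, day by day.

    Returns (day, actual, ideal, behind) tuples, where `behind` is True
    when more work remains than the ideal line allows.
    """
    report = []
    for day, actual in enumerate(remaining_by_day, start=1):
        ideal = ideal_remaining(total_points, sprint_days, day)
        report.append((day, actual, ideal, actual > ideal))
    return report


if __name__ == "__main__":
    # Hypothetical 10-day sprint with 40 story points, four days in.
    for day, actual, ideal, behind in burndown_report(40, 10, [35, 33, 26, 25]):
        flag = "BEHIND" if behind else "ok"
        print(f"day {day}: {actual} remaining (ideal {ideal:.0f}) {flag}")
```

Note that the flag only says the numbers are off the line; it says nothing about why, which is exactly where the estimation problems discussed below come in.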

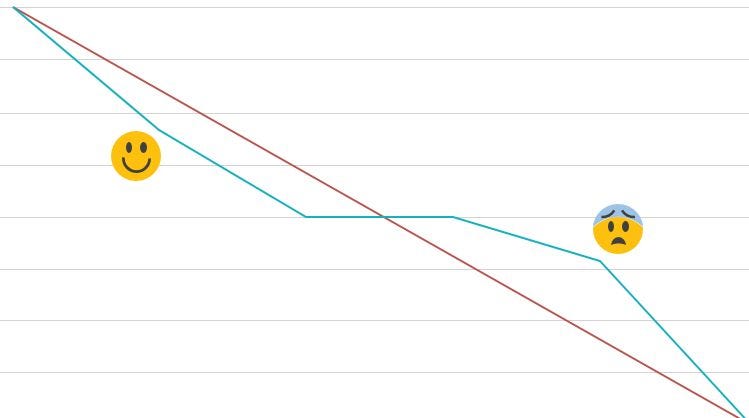

The scrum burndown chart

Again, from a business standpoint, this is a significant victory. Software projects are inherently terrifying for managers, because they invest a significant sum of money with little confidence of any return on that investment, so seeing red, yellow, and green boxes appear on a chart can be reassuring.

Where Agile Fails

The problem, of course, is in the details — and in the nature of human behavior.

Most project management is based on the idea that a task can be measured against the metrics other people have used to measure the same task.

Building an assembly line is very predictable (at least in the old economy), and because it has been done so many times, you can usually estimate how long it will take to within a few days.

But creating software isn’t so predictable. In most cases, it’s cheaper (if not always better from an organizational point of view) to buy off-the-shelf software, even if the prices are high. That’s because the functionality you need already exists, and someone else has already paid the price of building it the first time.

Task estimation in Software Development

How long does it take to build login functionality?

It takes about an hour to code up the user interface.

It can take thirty minutes to code the back end … or thirty days.

If you want full integration with your Active Directory authentication system on a non-standard platform that only supports LDAP, and you want to add two-factor email authentication into the mix, then the UI is the least of your worries.

There is a fallacy in computer programming circles that all applications are ultimately decomposable; that is, that you can break a complex application down into many simpler ones.

In reality, you often can’t get more complex behaviors to work until you have the right combination of components in place, and even then, you’ll run into issues with synchronization of data availability, memory usage and deallocation, and race conditions — issues that will only become apparent after you’ve built the majority of the plumbing.

But will it scale?

This is why the phrase “but will it scale?” has entered the vernacular of programmers worldwide. Scale issues appear only after you’ve almost completely developed the system and attempted to make it perform under more extreme settings.

The solutions often entail scrapping significant parts of what you’ve just built, much to the consternation of managers everywhere. This is one of the reasons why developers really hate having to put any numbers on tasks if they can help it.

It also suggests that Agile does not always scale well. Integration dependencies are often not tracked (or are subsumed into hierarchical stories), yet integration tends to be one of the most variable aspects of any software development.

Realistically, this is not so much a fault of Agile as of its most common tools. Technically speaking, a project plan is a graph of information, not just a tree. You have dependencies in space, time, organization, abstraction, and complexity, and estimating time against complexity is often the weakest link in these tools. At the same time, the problem gets worse when too many people are involved in a project, because the complexity of managing it grows much faster than the headcount.
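To make the graph-versus-tree point concrete, here is a minimal Python sketch using the standard library’s `graphlib.TopologicalSorter` (Python 3.9+). The task names and day estimates are hypothetical, loosely based on the login example above. One task (`ldap_adapter`) feeds two others, which a strict story/task hierarchy cannot express, and the sketch assumes unlimited parallel workers so that only dependencies limit the schedule.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical login-feature tasks: name -> (estimated days, prerequisites).
# "ldap_adapter" feeds two different tasks, so this is a graph, not a tree.
TASKS = {
    "login_ui": (1, set()),
    "ldap_adapter": (10, set()),
    "two_factor_email": (5, set()),
    "user_sync": (2, {"ldap_adapter"}),
    "auth_backend": (3, {"ldap_adapter", "two_factor_email"}),
    "login_flow": (1, {"login_ui", "auth_backend"}),
}


def earliest_finish(tasks: dict[str, tuple[int, set[str]]]) -> dict[str, int]:
    """Earliest finish day of each task, assuming unlimited parallel workers."""
    graph = {name: deps for name, (_, deps) in tasks.items()}
    finish: dict[str, int] = {}
    # static_order() yields every task only after all of its prerequisites.
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((finish[d] for d in deps), default=0)
        finish[name] = start + duration
    return finish


if __name__ == "__main__":
    finish = earliest_finish(TASKS)
    total_effort = sum(days for days, _ in TASKS.values())
    print("total effort (days):", total_effort)
    print("earliest completion (days):", max(finish.values()))
```

Here the estimates add up to 22 days of effort, but the completion date (day 14) is set by the chain through `ldap_adapter`, and a slip there ripples into two separate branches at once. The sketch deliberately ignores the integration surprises described above; it only shows why the structure, not just the sum of estimates, drives the schedule.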

Complex organizations

One consequence of this approach is that, with large teams, planning can consume as much as a quarter of the time available for development.

Another is that the constant emphasis on minimum viable product means that at any given point developers end up spending a significant percentage of their time building and demonstrating their work to date, eating up another ten to fifteen percent of their available time, often for code that will be thrown away.

Because this leaves roughly a “week” of development time in a two-week sprint, it also limits what you can accomplish in that sprint to the most bare-bones components possible.

Excess of meetings

This is especially true once you shave off another day for scrum meetings. They are intended to last only fifteen minutes, but in reality they can easily drag on when there are problems. Stretching sprints to four weeks makes more sense, but in practice few organizations do so.
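The arithmetic behind these figures can be sketched in a few lines of Python. This is a minimal illustration, assuming a two-week sprint of ten working days and taking the text’s figures as inputs: planning at up to a quarter of the time, demos at ten to fifteen percent, and roughly one day of scrum meetings. The function name and its defaults are hypothetical.

```python
def effective_dev_days(sprint_days: float = 10.0,
                       planning_fraction: float = 0.25,
                       demo_fraction: float = 0.15,
                       meeting_days: float = 1.0) -> float:
    """Working days left for development after planning, demos, and stand-ups.

    Defaults are the upper-end overhead figures from the text; all are assumptions.
    """
    planning = sprint_days * planning_fraction  # up to a quarter of the sprint
    demos = sprint_days * demo_fraction          # ten to fifteen percent
    return sprint_days - planning - demos - meeting_days


if __name__ == "__main__":
    print(effective_dev_days())                    # upper-end demo overhead
    print(effective_dev_days(demo_fraction=0.10))  # lower-end demo overhead
```

With the defaults this returns 5.0 days, and 5.5 days with demos at ten percent. Before the meeting day is subtracted, the figure is six to six and a half days, which is the rough “week” of development time mentioned above.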

These meetings also take a toll on managers, who by the nature of their roles often attend scrums at multiple levels of their organizations. That leaves them little time to actually lead at a strategic level and forces them to micromanage, typically with lackluster results.

Manpower consulting firms are frequently the most enthusiastic about Agile, even though their goal on any project is to maximize the number of developers and support staff employed, the opposite of Agile’s small-team premise.

The irony is that Agile ends up employed most heavily in operations where classical Waterfall methodology, with its emphasis on precise specifications and detailed up-front planning, would actually be preferable.

Data Projects and the Post-Agile World

Scrum Target failures

Aside from all of this, it should be emphasized that traditional Agile is ineffective for some types of projects. For various reasons, enterprise data projects in particular are poor candidates for Agile:

– Enterprise data systems (EDS) place a high value on data modeling, which can take anywhere from a few days to months, depending on the data sources and the scale of the company.

– EDS initiatives primarily concentrate on queries, transformations, and data transportation (ingestion and services), none of which are client-facing.

– In contrast to time-boxed application development, EDS projects are typically ongoing and necessitate a combination of automated data ingestion and active data curation.

To be honest, while a few development approaches have emerged in the corporate knowledge space, the domain itself is still young enough that no single methodology has established itself for enterprise data systems the way Agile has for application development. That is not surprising, given that the emphasis on enterprise data is relatively recent.

Data Modeling Paradigm

A key facet of enterprise data projects comes not in the technical integration of pipes between systems, but in the mapping of data models from one system to another, either by curation or machine learning.

In other words, the kind of work that is being done is shifting from an engineering problem (dedicated short term projects intended to connect systems) to a curational one (mapping models via minimal technical tools).
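To make the shift from engineering to curation concrete, here is a minimal Python sketch of a hand-curated field mapping between two systems. The CRM and warehouse field names and the transforms are hypothetical. The point is that the mapping table carries most of the knowledge, while the code that applies it is only a few lines; a machine-learned mapping would presumably change how the table is produced rather than this application step.

```python
from typing import Any, Callable

Mapping = dict[str, tuple[str, Callable[[Any], Any]]]

# Hypothetical curated mapping from a CRM export to a warehouse model:
# source field -> (target field, transform). This table is the curated artifact.
CRM_TO_WAREHOUSE: Mapping = {
    "cust_id": ("customer_id", str),
    "fname": ("first_name", str.strip),
    "signup": ("signup_date", lambda ts: ts[:10]),  # keep YYYY-MM-DD only
}


def map_record(record: dict[str, Any], mapping: Mapping) -> dict[str, Any]:
    """Apply a field mapping to one record; unmapped source fields are dropped."""
    return {
        target: transform(record[source])
        for source, (target, transform) in mapping.items()
        if source in record
    }


if __name__ == "__main__":
    row = {"cust_id": 42, "fname": " Ada ", "signup": "2020-01-05T10:00:00",
           "legacy_flag": "Y"}
    print(map_record(row, CRM_TO_WAREHOUSE))
```

Adding a new source system here means writing a new table, not new plumbing, which is the curational kind of work described above.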

The end of app development

This transition also points to what the future of Agile will end up being. In many respects we’re leaving the application era of development. Applications are thinner and mostly web-based, and connectivity to both data sets and composite enterprise data will matter more than complex client-based functionality. The same is true of mobile applications: increasingly, smartphone and tablet apps are just thin shells around mobile HTML and CSS, a sea change from the “there’s an app for that” era.

The client as a relatively thin endpoint means that the context in which Agile first developed, and for which it is best suited (stand-alone open source programs), is dwindling. Today, the average application is more likely to be a data stream of some kind, with the value lying in the data itself rather than in the programming. That makes the programming significantly simpler, and supported by a far broader array of tools, than it was twenty or even ten years ago.

Games may be the last major holdout for such applications, and even there, the emergence of a few consistent toolsets like the Unreal Engine means increasing convergence on the technical components, with Agile really living on only in areas like design and media creation.

The Post-Agile Era

What that points to in the longer term is that work methodologies are moving toward an asynchronous event model, in which information streams are connected, mapped, and then transformed into a native model in unpredictable ways. We release platforms, then “episodes” of content, some as small as a tweet, some as large as multi-gigabyte game updates. While aspects of Agile will remain, the post-Agile world has different priorities and requirements, and we should expect whatever paradigm finally succeeds it to treat the information stream as its fundamental unit.

Conclusions

So, Agile is not “dead”, but it is becoming ever less relevant. There’s something new forming (topic for another post, perhaps), but all I can say today is that it likely won’t have sand clocks.

#agile #innovation #methodologies #data #waterfall #sdlc